Focus on Integration Tests Instead of Mock-Based Tests

Posted on Apr 1, 2019. Updated on Dec 18, 2022

Testing classes in isolation and with mocks is popular. But those tests have drawbacks like painful refactorings and the untested integration of the real objects. Fortunately, it’s easy to write integration tests that hit all layers. This way, we are finally testing the behavior instead of the implementation. This post covers concrete code snippets, performance tips and technologies like Spring, JUnit5, Testcontainers, MockWebServer, and AssertJ for easily writing integration tests. Let’s discover integration tests as the sweet spot of testing.

TL;DR

- Traditional mock-based unit tests test classes in isolation. Drawbacks:

  - We don’t test whether the classes work together correctly.

  - Painful refactorings.

- Instead, focus more on integration tests in which we wire the real objects together and write a single test that hits all layers. Advantages:

  - We test behavior instead of an implementation.

  - We test much closer to reality because in production the application will also use the real objects.

  - Higher test coverage as we test both the parts and everything as a whole.

  - Refactorings in the internal structure are less likely to break your tests.

- Test against the real database (via testcontainers) instead of an in-memory-database to be even closer to production.

- For me, the proposed integration tests are the sweet spot of testing. They are a good compromise between setup effort and production-closeness.

- If you don’t like to use the term “integration test” for the proposed testing style you may prefer the term “component test”.

An Example

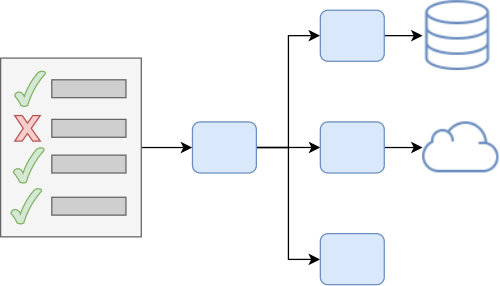

Let’s assume we like to write the HTTP resource /products. This resource retrieves the product data from a database, requests tax information from a remote service and executes some price calculation logic. Our class composition might look like this:

The class composition we like to test. Multiple classes, a database, and a remote system are involved.

Isolated Mock-Based Unit Tests

A typical solution for testing is to test every class in isolation. Many xUnit books advise this:

“Test your classes in isolation and control its dependencies with mocks or stubs”.

So we end up with four tests:

Four unit tests for testing each class in isolation and with mocks.

What do we have here?

- `ProductControllerTest`: In order to test the `ProductController` in isolation, we have to mock all used classes: the `ProductDAO`, the `TaxServiceClient`, and the `PriceCalculator`.

- The `ProductDAOTest` tests the database access logic in the `ProductDAO`. A popular approach is to use in-memory databases like H2 or Fongo.

- The `TaxServiceClientTest` tests the remote call logic in the `TaxServiceClient`. Often, we use some kind of mock server like MockWebServer or WireMock.

- The `PriceCalculatorTest` is straightforward as the `PriceCalculator` doesn’t have any dependencies.

This approach is okay. However, it has some drawbacks:

- Unreliable tests and limited test scope. Green mock-based unit tests don’t mean that our service works correctly in production because we never test if the real classes are working together correctly. We are not testing close enough to reality. I have experienced many times that mistakes happen exactly in the integration of the real classes. For instance, the `ProductDAO` may return `null` if no product was found, but the `TaxServiceClient` expects that the product is not `null`. It’s easy to forget to write unit tests for such cases.

- Painful refactorings. Mock-based tests are tightly coupled to the implementation of the application. Therefore, changing this implementation leads to broken tests and a lot of work to fix these tests. Refactorings can become a horrible nightmare. Imagine we change the internal data structure `ProductEntity` that is returned from the `ProductDAO` back to the `ProductController` and then passed on to the `TaxServiceClient` and the `PriceCalculator`. Most likely, none of the tests will compile anymore and we have to adapt them all.

- Laborious. In general, we have to write and maintain multiple test classes.

- In-Memory Database != Production Database. Using an in-memory database for tests also reduces the reliability and scope of our tests. The in-memory database and the database used in production behave differently and may return different results. So a green in-memory-database-based test is absolutely no guarantee for the correct behavior of your application in production. Moreover, you can easily run into situations where you can’t use (or test) a certain (database-specific) feature because the in-memory database doesn’t support it or acts differently. For details on this, check out the post ‘Don’t use In-Memory Databases (H2, Fongo) for Tests’.
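The `null` mismatch from the first drawback can be reproduced in a few lines. The following is only a minimal sketch: all classes are hypothetical, heavily simplified stand-ins (the `TaxRateResolver` plays the role of the collaborator that expects a non-null product), and the "mock" is a hand-written stub rather than a mocking library.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, heavily simplified stand-ins for the classes discussed above.
class NullIntegrationDemo {

    static class ProductEntity {
        final String id;
        final String category;

        ProductEntity(String id, String category) {
            this.id = id;
            this.category = category;
        }
    }

    interface ProductLookup {
        ProductEntity findById(String id);
    }

    /** "Real" DAO: like many DAOs, it returns null when nothing is found. */
    static class ProductDAO implements ProductLookup {
        private final Map<String, ProductEntity> rows = new HashMap<>();

        void insert(ProductEntity entity) {
            rows.put(entity.id, entity);
        }

        @Override
        public ProductEntity findById(String id) {
            return rows.get(id);
        }
    }

    /** Collaborator that silently assumes a non-null product. */
    static class TaxRateResolver {
        double rateFor(ProductEntity product) {
            return product.category.equals("book") ? 0.07 : 0.19;
        }
    }

    static class ProductController {
        private final ProductLookup dao;
        private final TaxRateResolver taxes;

        ProductController(ProductLookup dao, TaxRateResolver taxes) {
            this.dao = dao;
            this.taxes = taxes;
        }

        double taxRate(String productId) {
            return taxes.rateFor(dao.findById(productId));
        }
    }

    public static void main(String[] args) {
        TaxRateResolver taxes = new TaxRateResolver();

        // Mock-style test: the stub always answers with a product, so the test is green.
        ProductLookup stub = id -> new ProductEntity(id, "book");
        System.out.println("stubbed rate: " + new ProductController(stub, taxes).taxRate("unknown"));

        // Wired with the real DAO, the same request exposes the integration bug.
        try {
            new ProductController(new ProductDAO(), taxes).taxRate("unknown");
        } catch (NullPointerException e) {
            System.out.println("integration bug: the real DAO returned null");
        }
    }
}
```

Both isolated tests (one for the stubbed controller, one for the resolver with a valid product) would pass here, yet the wired objects fail for an unknown product ID.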

Integration Tests

What is the solution?

One integration test to rule them all

- We write a single integration test which tests all four classes together. So we only have one `ProductControllerIntegrationTest` that tests the `ProductController` which is wired together with the real `ProductDAO`, `TaxServiceClient` and the `PriceCalculator`.

- We start the production database in a docker container and configure the wired `ProductDAO` with the container’s address. The library Testcontainers provides an awesome Java API for managing containers directly in the test code.

- The responses of the remote tax service are the only thing left that has to be mocked.

What have we achieved?

- Accurate, meaningful and production-close tests. We test all classes and layers together and in the same way as in production. Bugs in the integration of the classes are much more likely to be detected. Thus, we are testing closer to reality and a green test is much more meaningful.

- Robust against refactorings. Integration tests are less likely to break when we do refactorings like extracting code to new methods or classes or changing the internal data structure that is passed around. We are now testing behavior and focusing on the input and output, which should not change after a refactoring of the application’s internals. Besides, integration tests are so powerful because we can immediately see if an internal refactoring broke something. In our experience, this is a huge relief.

- One test class to write. Ideally, we get along with a single integration test. Sure, the initial wiring and data creation for integration tests take more effort, but we only have to do it once. However, the world is not black and white. You can still write unit tests in addition to the integration tests. But you might end up testing the same things multiple times.

- Testing against the production database. The tests are even more meaningful because we are testing against the real database in the same version as in production. If a query succeeds in the tests, it will also succeed in production. Moreover, you can use every database-specific feature and test it properly.

- Easy setup and execution. Although we are doing integration testing against a real database, the setup is easy, because we can do the complete setup in the test class using Java code. There is no need to deploy the application to a certain staging environment and execute a dedicated test suite against this deployment. Moreover, we execute these integration tests like a normal unit test during the test phase of our build. No special treatment.

All in all, we are finally testing the behavior and not the implementation.

- Do you really have to test if a method throws a certain exception? No, the expected behavior following this exception is much more important. For instance, that a proper error response is returned to the client or that no data has been changed in the database. This is what matters. The exception is just an implementation detail to achieve this.

- Do you really have to test if a certain intermediate data structure is returned by a method? No. Again, only the final output matters (like a certain server response or a new entry in the database).

Let’s take a look at some code:

    @TestInstance(TestInstance.Lifecycle.PER_CLASS)
    public class ProductControllerITest {

        private MockWebServer taxService;
        private JdbcTemplate template;
        private MockMvc client;

        @BeforeAll
        public void setup() throws IOException {
            // ProductDAO
            PostgreSQLContainer db = new PostgreSQLContainer("postgres:11.2-alpine");
            db.start();
            DataSource dataSource = DataSourceBuilder.create()
                    .driverClassName("org.postgresql.Driver")
                    .username(db.getUsername())
                    .password(db.getPassword())
                    .url(db.getJdbcUrl())
                    .build();
            this.template = new JdbcTemplate(dataSource);
            SchemaCreator.createSchema(template);
            ProductDAO dao = new ProductDAO(template);

            // TaxServiceClient
            this.taxService = new MockWebServer();
            taxService.start();
            TaxServiceClient client = new TaxServiceClient(taxService.url("").toString());

            // PriceCalculator
            PriceCalculator calculator = new PriceCalculator();

            // ProductController
            ProductController controller = new ProductController(dao, client, calculator);
            this.client = MockMvcBuilders.standaloneSetup(controller).build();
        }

        @Test
        public void databaseDataIsCorrectlyReturned() throws Exception {
            insertIntoDatabase(
                    new ProductEntity().setId("90").setName("Envelope"),
                    new ProductEntity().setId("50").setName("Pen")
            );
            taxService.enqueue(new MockResponse()
                    .setResponseCode(200)
                    .setBody(toJson(new TaxServiceResponseDTO(Locale.GERMANY, 0.19)))
            );

            String responseJson = client.perform(get("/products"))
                    .andExpect(status().is(200))
                    .andReturn().getResponse().getContentAsString();

            assertThat(toDTOs(responseJson)).containsOnly(
                    new ProductDTO().setId("90").setName("Envelope").setPrice(0.5),
                    new ProductDTO().setId("50").setName("Pen").setPrice(0.5)
            );
        }
    }

Complete source code of the ProductControllerITest

Challenges and Reservations

Execution Speed

“Those integration tests take more time which slows down the feedback cycle.”

It doesn’t matter if you are wiring the real objects or mocks together. The execution time is as fast as with mocks - sometimes even faster because creating concrete objects is faster than creating mocks. However, there are two points that can take more time:

- Bootstrapping the (Spring) DI framework. It’s true that this takes some seconds.

  - That’s why I usually don’t use DI in my integration tests. I instantiate the required objects manually by calling `new` and plug them together. If you are using constructor injection, this is very easy. The drawback is that we don’t test the DI configuration anymore (and are less close to production). However, most of the time you want to test the business logic you have written. For this, you don’t need DI.

  - Moreover, Spring Boot 2.2 introduced an easy way to use lazy bean initialization, which should significantly speed up DI-based tests.

- Container startup time. This is something you can’t avoid. Starting a real database is slower than starting an in-memory database. You trade execution speed for accuracy, which is a good deal for me. Still, there are two things you can do:

  - Start the container once and reuse it for all tests in the test suite. So you only have to wait once. In Java, you can use a `static` field for this. In Kotlin, I recommend using an `object` singleton and a `lazy {}` initialized property.

  - Starting the database again and again during the iterative development of a test is annoying. Therefore, I start the database once via docker-compose and point my tests to this running database. Only if no such local database is running, I start a new one with Testcontainers. The necessary switch is simple:

    private DataSource createDataSourceAndStartDatabaseIfNecessary() {
        DataSourceBuilder<?> builder = DataSourceBuilder.create().driverClassName("org.postgresql.Driver");
        try {
            // e.g. if started once via `docker-compose up`. see docker-compose.yml.
            Socket socket = new Socket();
            socket.connect(new InetSocketAddress("localhost", 5432), 100);
            socket.close();
            return builder.username("postgres").password("password")
                    .url("jdbc:postgresql://localhost:5432/")
                    .build();
        } catch (Exception ex) {
            PostgreSQLContainer db = new PostgreSQLContainer("postgres:11.2-alpine");
            db.start();
            return builder.username(db.getUsername()).password(db.getPassword())
                    .url(db.getJdbcUrl())
                    .build();
        }
    }

If you don’t like this automatic detection, you can also consider introducing a system property for this.
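A system property switch could look roughly like the following sketch. The property name `test.db.target` and the `Target` enum are assumptions made up for this illustration; they are not part of the original project.

```java
class DatabaseTargetDemo {

    /** Where the tests should find their database. */
    enum Target { LOCAL, CONTAINER }

    /**
     * Interprets the value of the hypothetical system property "test.db.target".
     * Unset or unknown values fall back to CONTAINER, so a plain build still works.
     */
    static Target resolve(String propertyValue) {
        if (propertyValue != null && propertyValue.trim().equalsIgnoreCase("local")) {
            return Target.LOCAL;
        }
        return Target.CONTAINER;
    }

    public static void main(String[] args) {
        Target target = resolve(System.getProperty("test.db.target"));
        // In a real test setup, LOCAL would build the DataSource for localhost:5432,
        // and CONTAINER would start a PostgreSQLContainer, as in the method above.
        System.out.println("Using database target: " + target);
    }
}
```

With this in place, passing `-Dtest.db.target=local` to the test JVM selects the already running local database, and everything else starts a container.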

    # docker-compose.yml
    version: '3.1'
    services:
      db:
        image: "postgres:11.2-alpine"
        environment:
          POSTGRES_PASSWORD: password
        ports:
          - "5432:5432"

    docker-compose up

- Always add the VM options `-noverify -XX:TieredStopAtLevel=1` to your run configurations. It will save 1 - 2 seconds for each execution. You can add them to the “JUnit” run configuration template in IntelliJ IDEA. So you don’t have to add them to each new run configuration manually.
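The "start once and reuse" advice from the list above can be sketched with a lazily initialized static holder. `FakeDatabase` is a hypothetical stand-in for an expensive resource such as a database container; in a real test suite, its constructor is where the container would be created and started.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedDatabaseDemo {

    /** Hypothetical stand-in for an expensive resource such as a database container. */
    static class FakeDatabase {
        static final AtomicInteger STARTS = new AtomicInteger();

        FakeDatabase() {
            STARTS.incrementAndGet(); // imagine the slow container start here
        }

        String jdbcUrl() {
            return "jdbc:postgresql://localhost:5432/";
        }
    }

    /** Holds one database for the whole JVM; it is created lazily on first access. */
    static class SharedDatabase {
        private static class Holder {
            static final FakeDatabase INSTANCE = new FakeDatabase();
        }

        static FakeDatabase get() {
            return Holder.INSTANCE;
        }
    }

    public static void main(String[] args) {
        // Several test classes asking for the database share the same instance.
        SharedDatabase.get();
        SharedDatabase.get();
        System.out.println("starts: " + FakeDatabase.STARTS.get());
    }
}
```

The JVM initializes the nested `Holder` class only once and in a thread-safe way, so every test class that calls `SharedDatabase.get()` waits for the startup at most once per test run.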

More Effort for Input Data Creation and Output Data Assertions

“In integration tests, creating the input data objects and the expected output object for the assertions is laborious.”

That’s true, there is more data to set up and to check at the end, which can bloat the test code. But there are solutions for this:

- Intensively use helper functions.

  - Write the method `createProductEntity(id, name, amount)` which creates a `ProductEntity` using the three passed values. The remaining product values are set to reasonable default values. This way, you can control the values that are relevant for the tests and avoid distracting the reader with irrelevant values. This greatly improves the readability of the test code. In reality, you often need multiple versions of these functions because each test is interested in different values. In Kotlin, default arguments are an extremely powerful feature for this. In Java, you have to use method chaining and overloading to simulate default arguments.

  - Writing `Instant.ofEpochSecond(1)` or `new ObjectId("000000000000000001")` again and again bloats the test code. Consider writing small helper functions like `toInstant(1)` or `toId("1")` for this. Kotlin’s extension functions can increase the readability even more (`1.toInstant()`, `"1".toId()`). Every small portion of saved code helps.

  - Vararg parameters are also very handy to concisely pass multiple objects to a helper function. Just write an `insert(ProductEntity... entities)` method that takes one or multiple `ProductEntity` objects and inserts them into the database.

- Utilize Parameterized Tests. This way, you can avoid writing multiple tests that are doing similar things. Just write one test and use parameters for the possible variations of the data.

- AssertJ provides powerful assertions that let you write complex assertions with little code. Some examples are:

    assertThat(actualProductList).containsExactly(
            createProductDTO("1", "Smartphone", 250.00),
            createProductDTO("1", "Smartphone", 250.00)
    );

    assertThat(actualProductList).anySatisfy(product -> {
        assertThat(product.getDateCreated()).isBetween(instant1, instant2);
    });

    assertThat(actualProduct)
            .isEqualToIgnoringGivenFields(expectedProduct, "id");

    assertThat(actualProductList)
            .usingElementComparatorIgnoringFields("id")
            .containsExactly(expectedProduct1, expectedProduct2);

    assertThat(actualProductList)
            .extracting(Product::getId)
            .containsExactly("1", "2");
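The helper functions described in the list above could be sketched in plain Java as follows. The fields of `ProductEntity`, the default values, and the in-memory `TABLE` (a stand-in for the real database insert) are assumptions chosen for illustration only.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class TestDataHelpersDemo {

    /** Hypothetical entity with more fields than most tests care about. */
    static class ProductEntity {
        String id;
        String name;
        int amount;
        Instant dateCreated;
    }

    /** Stand-in for the database table; a real helper would issue SQL inserts. */
    static final List<ProductEntity> TABLE = new ArrayList<>();

    /** Short alias that keeps timestamps in test code compact. */
    static Instant toInstant(long epochSecond) {
        return Instant.ofEpochSecond(epochSecond);
    }

    /** Sets the values a test cares about and fills the rest with defaults. */
    static ProductEntity createProductEntity(String id, String name, int amount) {
        ProductEntity entity = new ProductEntity();
        entity.id = id;
        entity.name = name;
        entity.amount = amount;
        entity.dateCreated = toInstant(1); // irrelevant for most tests
        return entity;
    }

    /** Overload that simulates a default argument for the amount. */
    static ProductEntity createProductEntity(String id, String name) {
        return createProductEntity(id, name, 1);
    }

    /** Varargs keep the call site short for one or many entities. */
    static void insert(ProductEntity... entities) {
        TABLE.addAll(Arrays.asList(entities));
    }

    public static void main(String[] args) {
        insert(
                createProductEntity("1", "Pen"),
                createProductEntity("2", "Envelope", 5)
        );
        System.out.println("rows: " + TABLE.size());
    }
}
```

A test then reads as a short list of the values that matter, while defaults such as the creation date stay out of sight.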

Overkill for Corner Case Testing

“I just want to test if `ProductDAO`’s method `findProducts()` behaves as expected when I pass the crazy parameter combination XYZ to it. Writing an integration test would be overkill. It’s so much easier to use unit tests for this.”

I can understand this argument and I’m not dogmatic about this point. But I always recommend trying to write integration tests also for corner cases; especially for corner cases.

- If you already have integration tests with the required wiring and the creation helpers, it’s usually no big deal to add another test. You just reuse what’s already there.

- Moreover, you want to ensure a certain behavior for corner cases. Again, you don’t want to test that `findProducts()` returns the correct internal data structure for a crazy parameter combination. You want to test that the user still gets a reasonable response or that no broken data is created in the database. You can only test this with an integration test.

Untestable Features and Conditions

“I can’t test feature X under Condition Y with an integration test.”

In my experience, you can test nearly everything with integration tests. Try it, you’ll be surprised what you can cover with integration tests. For the rest: unit tests are still allowed. ;-)

“I can’t test if my DAO throws Exception XY or returns the internal data structure AB”

Again, most of the time, you don’t have to. What you actually should test is the behavior that follows a certain exception (no database updates, no subsequent calls to remote systems) and if an internal data structure leads to a certain output or effect.
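As a minimal sketch of this idea, the following example asserts on the outcome (the status returned to the caller and the unchanged data) rather than on the exception itself. `ProductStore` and `ProductResource` are hypothetical stand-ins, and the list replaces the real database.

```java
import java.util.ArrayList;
import java.util.List;

class BehaviorOverExceptionDemo {

    /** Hypothetical persistence layer; the exception is an internal detail. */
    static class ProductStore {
        final List<String> names = new ArrayList<>();

        void save(String name) {
            if (name == null || name.trim().isEmpty()) {
                throw new IllegalArgumentException("name must not be blank");
            }
            names.add(name);
        }
    }

    /** Hypothetical resource that maps the outcome to an HTTP-like status code. */
    static class ProductResource {
        private final ProductStore store;

        ProductResource(ProductStore store) {
            this.store = store;
        }

        int create(String name) {
            try {
                store.save(name);
                return 201;
            } catch (IllegalArgumentException e) {
                return 400;
            }
        }
    }

    public static void main(String[] args) {
        ProductStore store = new ProductStore();
        ProductResource resource = new ProductResource(store);

        // The test only looks at what the caller and the data store observe.
        System.out.println("status: " + resource.create(" "));
        System.out.println("stored rows: " + store.names.size());
    }
}
```

If the validation later moves to another class or uses a different exception type, such a test keeps passing as long as the response and the stored data stay the same.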

“I have to mock this HTTP client or DAO class to simulate network failures or service outages by throwing the corresponding exception”

You don’t have to. Libraries like MockWebServer and Testcontainers (via Toxiproxy) allow you to simulate slow networks or unavailable external systems. So you can safely use the real classes in the tests.
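To illustrate the principle without extra dependencies, the sketch below uses the JDK’s built-in `HttpServer` as a stand-in for MockWebServer (it is not MockWebServer’s API). The `TaxClient`, its fallback rate, and the 300 ms timeout are assumptions for this example; the point is that the real HTTP client code runs against a server that is slow or answers with an error.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

class OutageSimulationDemo {

    static final double FALLBACK_RATE = 0.0;

    /** Hypothetical real client: nothing is mocked, it talks HTTP. */
    static class TaxClient {
        private final HttpClient http = HttpClient.newHttpClient();
        private final URI endpoint;

        TaxClient(URI endpoint) {
            this.endpoint = endpoint;
        }

        /** Returns the tax rate, or the fallback if the service fails or is too slow. */
        double fetchTaxRate() {
            HttpRequest request = HttpRequest.newBuilder(endpoint)
                    .timeout(Duration.ofMillis(300))
                    .GET()
                    .build();
            try {
                HttpResponse<String> response =
                        http.send(request, HttpResponse.BodyHandlers.ofString());
                return response.statusCode() == 200
                        ? Double.parseDouble(response.body())
                        : FALLBACK_RATE;
            } catch (IOException e) { // also covers the request timeout
                return FALLBACK_RATE;
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return FALLBACK_RATE;
            }
        }
    }

    /** Starts a local server with the given behavior and asks the real client. */
    static double rateSeenByClient(int status, String body, long delayMillis) {
        HttpServer server;
        try {
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        server.createContext("/tax", exchange -> {
            try {
                Thread.sleep(delayMillis); // simulates a slow remote system
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(status, bytes.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(bytes);
            }
        });
        server.start();
        try {
            int port = server.getAddress().getPort();
            return new TaxClient(URI.create("http://127.0.0.1:" + port + "/tax")).fetchTaxRate();
        } finally {
            server.stop(0);
        }
    }

    public static void main(String[] args) {
        System.out.println("healthy:     " + rateSeenByClient(200, "0.19", 0));
        System.out.println("unavailable: " + rateSeenByClient(503, "unavailable", 0));
        System.out.println("too slow:    " + rateSeenByClient(200, "0.19", 1000));
    }
}
```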

We Already Have VM-Based System Tests

“We still have to test if all real services are working together correctly. That’s why we deploy all services of our system on a VM and run system tests against it.”

That’s great and those system tests are important. However, in my experience, those tests tend to be fragile. A single mistake in one downstream service or some wrong data can break your tests. Debugging can be a nightmare. Moreover, the effort for setting up this test environment is quite high. Plus, the feedback cycle is slow because you have to build and redeploy your service after a change.

The proposed integration tests are portable, fast, easy to set up and reliable. That’s a huge advantage, but the trade-off is that they don’t cover the whole system.

That’s not an “Integration Test”

There is no standard test taxonomy and therefore no common definition of the term “integration test”. I apply the term to the class level and test the integration of multiple classes. Some use the term for testing multiple deployment units in integration (which I would refer to as “system tests”). If you want to avoid this ambiguity, you can use the term “component test” for the proposed testing style.

More Examples

Besides the classical HTTP endpoint integration test above, there are many other use cases.

Button Click in the (Vaadin) UI

Integration tests are even possible for UI tests. You can’t really test the layout and the concrete appearance in the browser, but you can test the business logic without much setup.

- Input: Insert some entities in the database.

- Action: Click on the button programmatically.

- Output: Assert the database state or the shown components in the UI.

If you are using Vaadin, writing those tests is very simple, because Vaadin provides a Java API for building your web UIs which can also be used in the tests for assertions. Moreover, the library Karibu-Testing is very handy here.

    // Karibu-Testing works much better with Kotlin, but let's stick with Java for this example.
    @TestInstance(TestInstance.Lifecycle.PER_CLASS)
    public class ProductViewITest {

        private JdbcTemplate template;
        private ProductView view;

        @BeforeAll
        public void beforeAll() {
            MockVaadin.setup();

            PostgreSQLContainer db = new PostgreSQLContainer("postgres:11.2-alpine");
            db.start();
            DataSource dataSource = DataSourceBuilder.create()
                    .driverClassName("org.postgresql.Driver")
                    .username(db.getUsername())
                    .password(db.getPassword())
                    .url(db.getJdbcUrl())
                    .build();
            template = new JdbcTemplate(dataSource);
            SchemaCreator.createSchema(template);
        }

        @BeforeEach
        public void beforeEach() {
            ProductDAO dao = new ProductDAO(template);
            view = new ProductView(dao);
        }

        @Test
        public void productsAreCorrectlyDisplayedInTable() {
            insertIntoDatabase(
                    new ProductEntity().setId("90").setName("Envelope"),
                    new ProductEntity().setId("50").setName("Pen")
            );

            Button button = _get(view, Button.class, spec -> spec.withText("Load Products"));
            _click(button);

            Grid<ProductModel> grid = _get(view, Grid.class);
            assertThat(GridKt._size(grid)).isEqualTo(2);
            assertThat(GridKt._get(grid, 0))
                    .isEqualTo(new ProductModel().setId("90").setName("Envelope"));
        }
    }

Complete source code of the ProductViewITest

Background Jobs, Scheduler

Let’s assume we want to test the behavior of a background job (for instance, a method that is annotated with Spring’s `@Scheduled` annotation).

- Input: Insert some entities in the database.

- Action: Call the annotated method.

- Output: Assert the database state.

The same approach can be applied here and the code looks pretty much like the ProductControllerITest.

Source Code

The complete source can be found on GitHub in the project modern-integration-testing.