Self-Contained Systems in Practice with Vaadin and Feeds

Posted on May 16, 2018. Updated on Jan 20, 2019

The term Microservices is quite vague as it leaves many questions unanswered. Contrarily, a Self-Contained Systems subsumes concrete recommendations and best practices that can guide you to create an application which is resilient and independent. But how can we implement such a system? At Spreadshirt, we build an application following the recommendations of a Self-Contained System. In this post, I’ll show you which technologies we used and which challenges we faced.

TL;DR

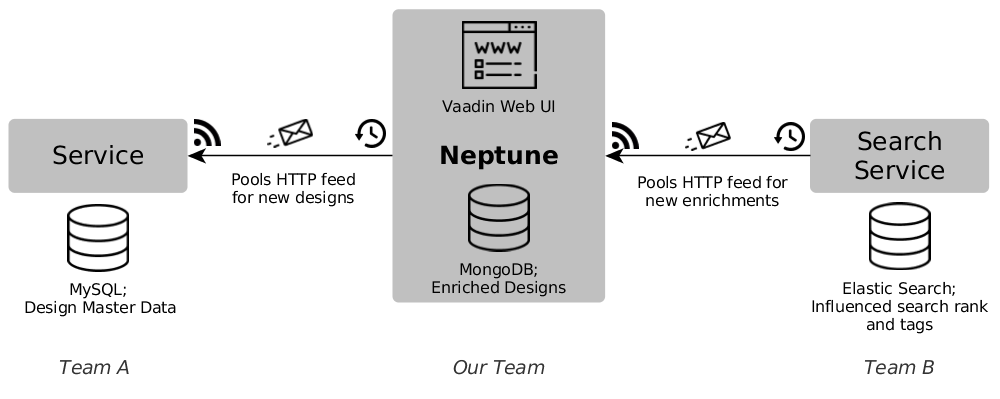

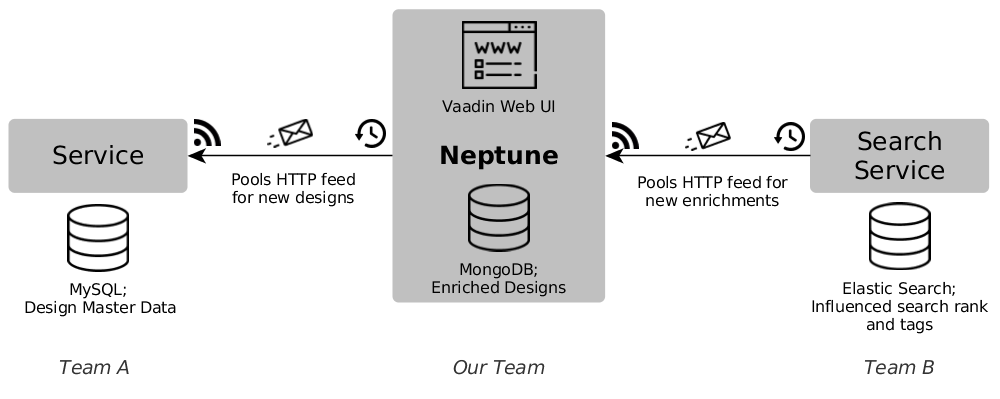

Big Picture of our Self-Contained System ‘Neptune’.

- A Self-Contained System is a kind of microservice but has concrete characteristics. It is a good guideline to create a system that is independent in terms of deployment, development, technology and other systems.

- It should have an own UI and database

- Its primary purpose should be fulfilled without calling other systems. Instead, the database should be used.

- If possible, it communicates asynchronously via events with other systems.

- Neptune is an internal application for design management at Spreadshirt. It conforms with the constraints of an SCS.

- The Web UI is built with Vaadin.

- The events are implemented as an HTTP Feed which is polled periodically.

- We use MonogDB as our database.

- A Self-Contained System combines the advantages of monoliths with the benefits of microservices.

- Advantages of monoliths: reduced distribution, easy operations, easy refactoring, “having everything at hand”

- Benefits of microservices: independent development, technology, and deployment

- Due to the own database and the asynchronous communication, SCS are resilient by design.

- The challenges of our SCS have been the increased implementation effort for publishing and consuming events via feeds, a reliable pagination approach, and the data redundancy.

Self-Contained Systems

The term “microservices” is not officially defined. One pretty wide definition is:

Microservices is a modularization approach aiming at independently deployable units.

This is quite vague and leaves many questions unanswered: Does a UI belong to a microservices? Should a microservice have an own database? What about sharing a database? Should one microservice call another one synchronously? Or should data be replicated asynchronously? Should data redundancy be avoided? Is it ok, for a user request to require subsequent remote calls?

Self-Contained Systems (SCS) answers those questions. They define a set of characteristics leading to an autonomous and loosely coupled application. It’s independent in terms of development, deployment, scalability and in case of outages of other services. Some characteristics are:

- An SCS should contain everything that is required to fulfill the business requirements. This includes an own web UI, the business logic, and the data. Hence, an SCS is a complete vertical.

- So it must have an own database which is not used by other services.

- The main use case of the SCS can be fulfilled without requesting other systems. This means, that the user can browse through the web UI of the SCS without triggering subsequent remote calls to other systems. Instead, the SCS should rely on its database to fulfill its primary purpose.

- The communication with other services should be asynchronous and event-driven.

- The required data is replicated via events into the SCS' database.

- An SCS should be owned by one team.

Architecture and Technologies of our Self-Contained System

Our Self-Contained System is called “Neptune” and is used for design management and enrichment. The important point is, that Neptune is an internal application. So scalability and throughput are not that important.

Our technology stack: Kotlin, Spring Boot, Vaadin, Spring MVC, MongoDB

Let’s see how we applied the SCS constraints to Neptune. Here’s the big picture:

Big Picture of our Self-Contained System ‘Neptune’ and its boundaries.

A Single Deployment Unit and Reduced Distribution

We put every functionality into a single deployment unit: the UI, the database access layer, the client for fetching the master data and our feed. There is no further distribution within our self-contained system.

- Reduced Distribution. This is really useful as it makes the development more productive and the system more robust. Do you need to display some data to the user in the UI or expose it in the feed? Just use the DAO class and access the database directly. That’s it. No HTTP client implementation required. No brittle and slow remote service calls between you and your data. In a world of over-distribution, this is so refreshing.

- Easy operations. Only one component that has to be deployed and monitored (metrics, logs).

- Easy deployment. No need to coordinate the deployments of multiple services (e.g. frontend and backend).

- Refactoring within the self-contained system are straight-forward.

So we get some of the advantages which we know from monoliths. And this is really great! But a self-contained system is not a monolith. An SCS has a focused domain and purpose. It contains only those workflows and domain entities that are required in order to fulfil this goal. The purpose of Neptune is design management and enrichment. Thus we only care for (some) design data and provide a UI only for dealing with designs. In Domain Driven Design, we would call it a bounded context.

Vaadin UI

In an SCS, the UI should be part of the same deployment unit as the business logic. This ensures an easy deployment (no coordination required) and centralizes the domain logic in one component, which in turn simplifies changes.

The concrete UI technologies are not restricted by SCS. Some options are:

- Server-side rendering. For instance with Spring MVC and Thymeleaf. For me, this approach is highly underestimated nowadays.

- A JavaScript SPA which is delivered by the SCS. The SCS provides HTTP endpoints which are called by the SPA.

- Use Vaadin to create an SPA using Java/Kotlin. Under the hood, Vaadin creates a JavaScript SPA for us and takes care of the whole client-server-communication via HTTP. The developer only has to write the server-side code in Java or Kotlin. Changes to the server-side Java objects (representing the UI components) are automatically applied to the DOM in the browser.

We decided to go for Vaadin due to the following reasons:

- Productivity.

- Vaadin provides a powerful widget set and takes care of the client-server-communication and session handling. We don’t have to implement that manually.

- We can stick to our well-known Java ecosystem: libraries, test tools and approaches, coding guidelines, idiomatic code, build tools, deployment, IDEs - everything keeps the same. No need to dive into the JavaScript ecosystem and learn this again. This was a huge advantage for us and should not be underestimated.

- The domain logic is inherently centralized on the server-side. You simply can’t scatter it between the client and the server in Vaadin*. Domain changes only require touching the server-side code. At this point, Vaadin fits pretty well to the SCS approach as we have a single point of maintenance.

- We don’t feel a “technology break” between the UI and the backend code. We can easily access the database via the DAO objects after a browser’s button-pressed-event comes in and change the brower’s UI from the server-side. Everything can be done in the same language and at the same point.

- We can write our UIs in Kotlin. This is a great benefit as Kotlin makes our code safer (immutability, null-safety) and much more concise (data classes, expressions). In fact, we experienced fewer bugs and boilerplate in our code.

But Vaadin is no silver bullet. If high scalability, SEO or fine-grained and direct control over the DOM would have been our first class citizens, we wouldn’t have chosen Vaadin. But as Neptune is an internal application, Vaadin is the right tool for this job.

If you like to read more about Vaadin, check out my Vaadin Evaluation.

Events via HTTP Feeds and Polling

We decided to implement our events as HTTP feeds which are polled regularly. So Neptune polls a feed on another service to retrieve new design master data. If a user marks and labels a design, the created enrichment data is also exposed via a feed by Neptune and offered to interested services. This way, we asynchronously synchronize the data in our platform.

Implementing events with an HTTP feed and clients is more laborious than using a messaging broker like Kafka or ActiveMQ. You have to take more effort into implementing it properly and in a reliable way especially in case of errors. Moreover, the inconsistency gap when polling is usually bigger. But the advantages are that you don’t need any shared infrastructure (like the messaging broker) anymore and you can implement certain configuration (poll frequency, time that an event is kept, reading the complete history) right into the components and control it that way. Also, evolving the payload format and URLs is more easy with the means of HTTP and Hypermedia (see Ensure Evolvability of the API).

We didn’t use standards like Atom for our HTTP Feed. Instead, we used a custom JSON API, which is slightly inspired by json:api. A real challenge was to implement a fast, stateless, reliable and efficient pagination approach. It took us multiple iterations until we ended up with the Timestamp_ID continuation token. Check out my dedicated blog post about this topic.

// GET /api/enrichedDesigns?modifiedSince=1504224000000

{

"data": [

{ "id": 1, ... },

{ "id": 2, ... },

],

"pagination": {

"continuationToken": "1519986384855_5a9926d0bbcfe7175512937f",

"hasNext": true

},

"links": {

"next": "/enrichedDesigns?continuationToken=1519986384855_5a9926d0bbcfe7175512937f"

}

}

// retrieve the next page:

// GET /api/enrichedDesigns?continuationToken=1519986384855_5a9926d0bbcfe7175512937f

Own Database

An own database has the following advantages:

- The user request can be fulfilled without remote calls to other services. This makes Neptune much more independent in case of outages of other services.

- Calling a database is way faster than calling remote services.

- We can optimize the data structure and queries according to our use cases.

- We can choose a database that fits our requirements. We decided to go for MongoDB as its data model fits our domain model, it nicely supports varying entities (because it’s schemaless) and the object mapping is easy (no object-relational impedance mismatch)

- Local transactions (in case of relational databases).

Team Structure

Neptune and each service within its boundaries are owned by different teams. In order to reduce the coordination and communication effort between the team, it’s good to technically decouple the components as far as possible. We did this by communicating only asynchronously via events and following the “share-nothing” approach (no shared database, broker or libraries). All in all, the development of Neptune is independent. Business requirements can usually be implemented without other teams and by touching only Neptune.

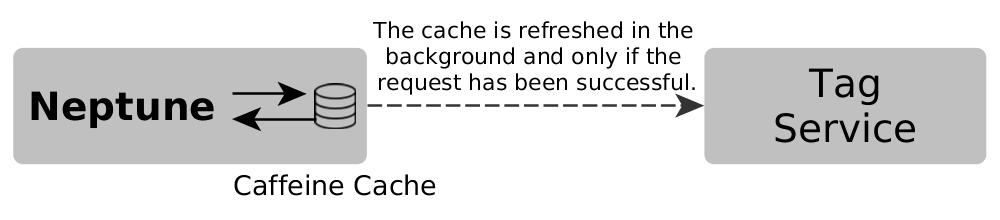

Compromises

As always, there is no rule without an exception. Neptune retrieves also data from another service, but this data is small and changes extremely rarely. So we decided to retrieve them synchronously but keep the data in a Caffeine cache. The coolest thing is the refreshAfterWrite() configuration. If the cache is expired, the cache doesn’t block but returns the expired value. But in the background, it tries to update the cache by calling the service again. If this fails, the old value remains. So we may become outdated, but are still available and responsible. Additionally, to avoid delays for the user, we warm up the cache during the startup of Neptune. This way, we are also resilient against outages of the service while keeping the implementation really simple (no own persistence, no logic for data replication and updating).

A Caffeine cache is used for a better performance and to increase the resilience.

val cache: LoadingCache<String, List<Tag>> = Caffeine.newBuilder()

.refreshAfterWrite(6, TimeUnit.HOURS)

.build { delegate.retrieveTags() }

Resilience without Netflix

What I like about Self-Contained System is the fact that they are resilient by design. You don’t have to throw resilience technology at a distributed system in order to make it resilient. In cases of too many synchronous (blocking) calls, people tend to utilize Netflix Hystrix, Spring Cloud or reactive programming. Don’t get me wrong, these technologies are great and can be very beneficial. But in some situations, the lack of resilience could have been avoided in the first place by an architecture that uses an own database and communicates asynchronously via events.

Challenges

Unfortunately, Self-Contained Systems are no free lunch.

- Increased implementation effort for replicating the data asynchronously. This subsumes writing and testing the feeds, the feed clients, a proper scheduling and a robust retry approach.

- Implementing a reliable and efficient pagination approach for the feeds is non-trivial. See

Timestamp_IDcontinuation token. - Due to the polling of the feed, we usually have a bigger inconsistency gap as we would have with a classical messaging broker. It depends on the domain if this is ok. For us, it was ok.

- We have to accept and deal with data redundancy and eventual consistency.

Did We Plan to Build an SCS?

Honestly, we didn’t set out to build a “Self-Contained System”. It just happened. We were thinking about a good architecture that leads independence in many ways. And we finally came up with this architecture. Afterward, I heard about Self-Contained Systems and listened to Oliver Gierke’s talk “Refactoring to a System of Systems” and realized: “That is exactly what we did with Neptune”. But I suppose I’ve been confirmation-biased. ;-)

Related Posts and Links

- Self-Contained Systems Website

- Talk Refactoring to a System of Systems by Oliver Gierke. Many of the mentioned ideas can be found in a self-contained system. He nicely motivates this kind of architectures but focuses on the backend integration.

- Slides of the talk Self-contained Systems: A Different Approach to Microservices by Eberhard Wolff

- Vaadin wrote a success story about Neptune.

- Related Posts: